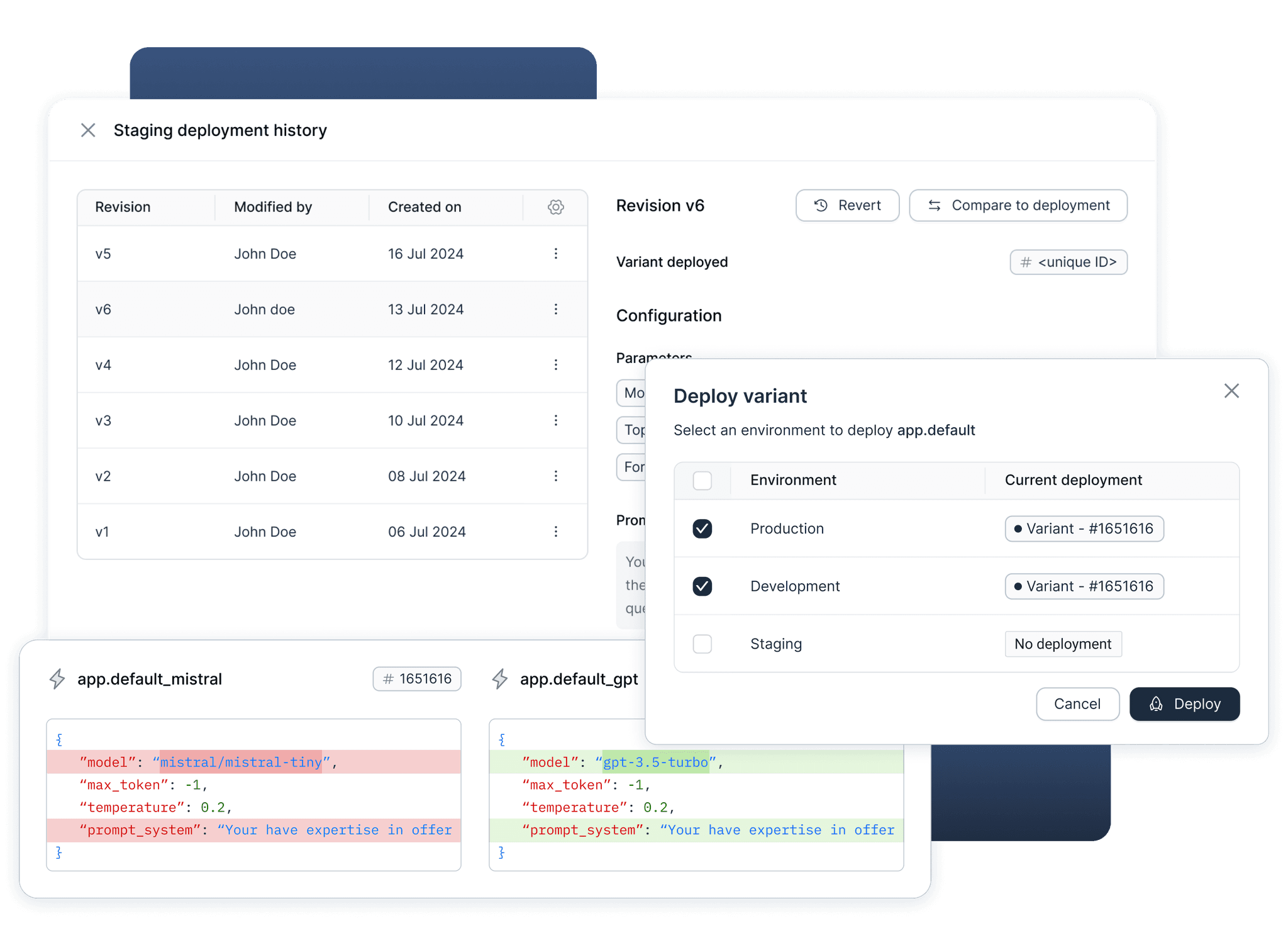

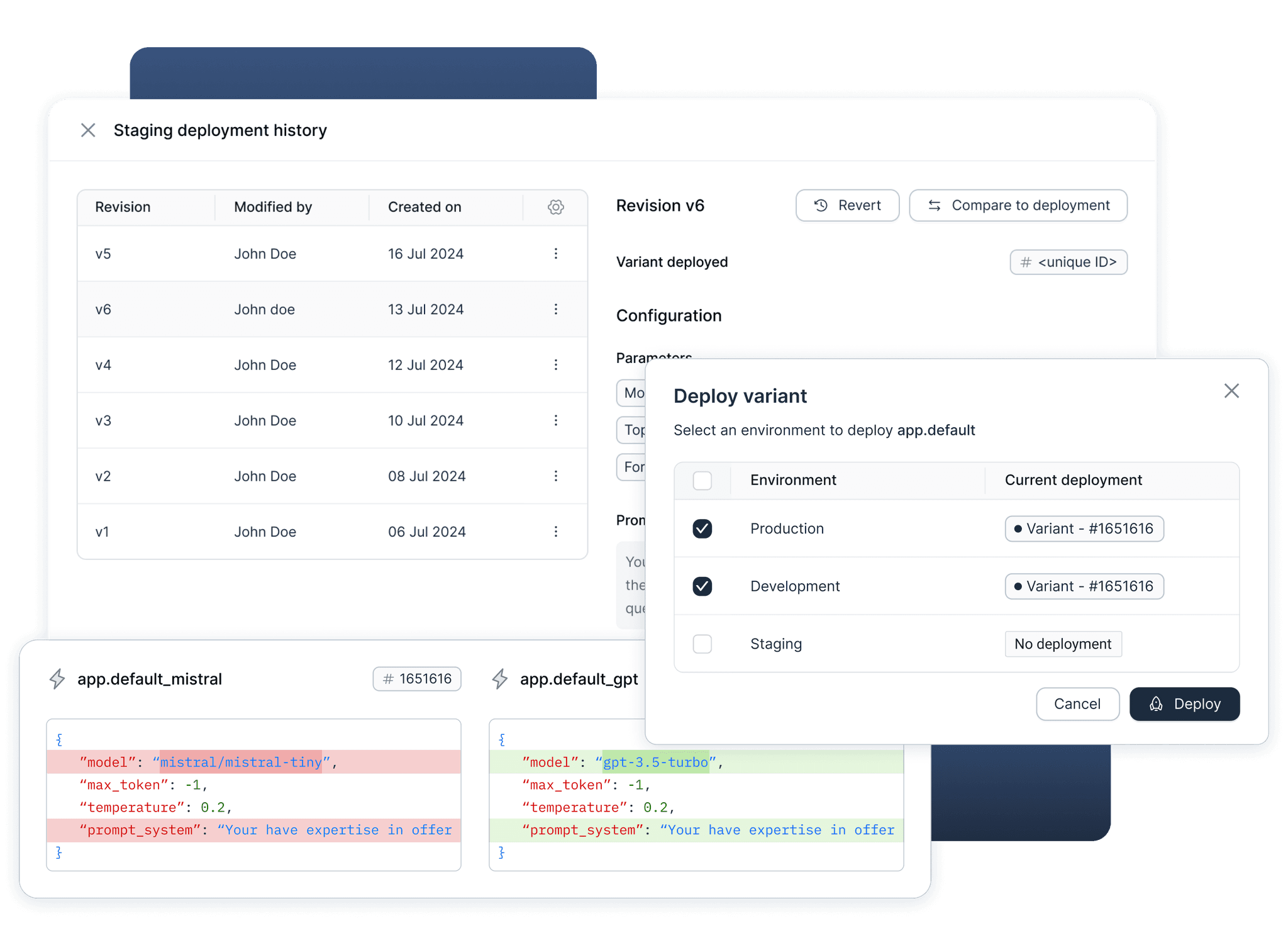

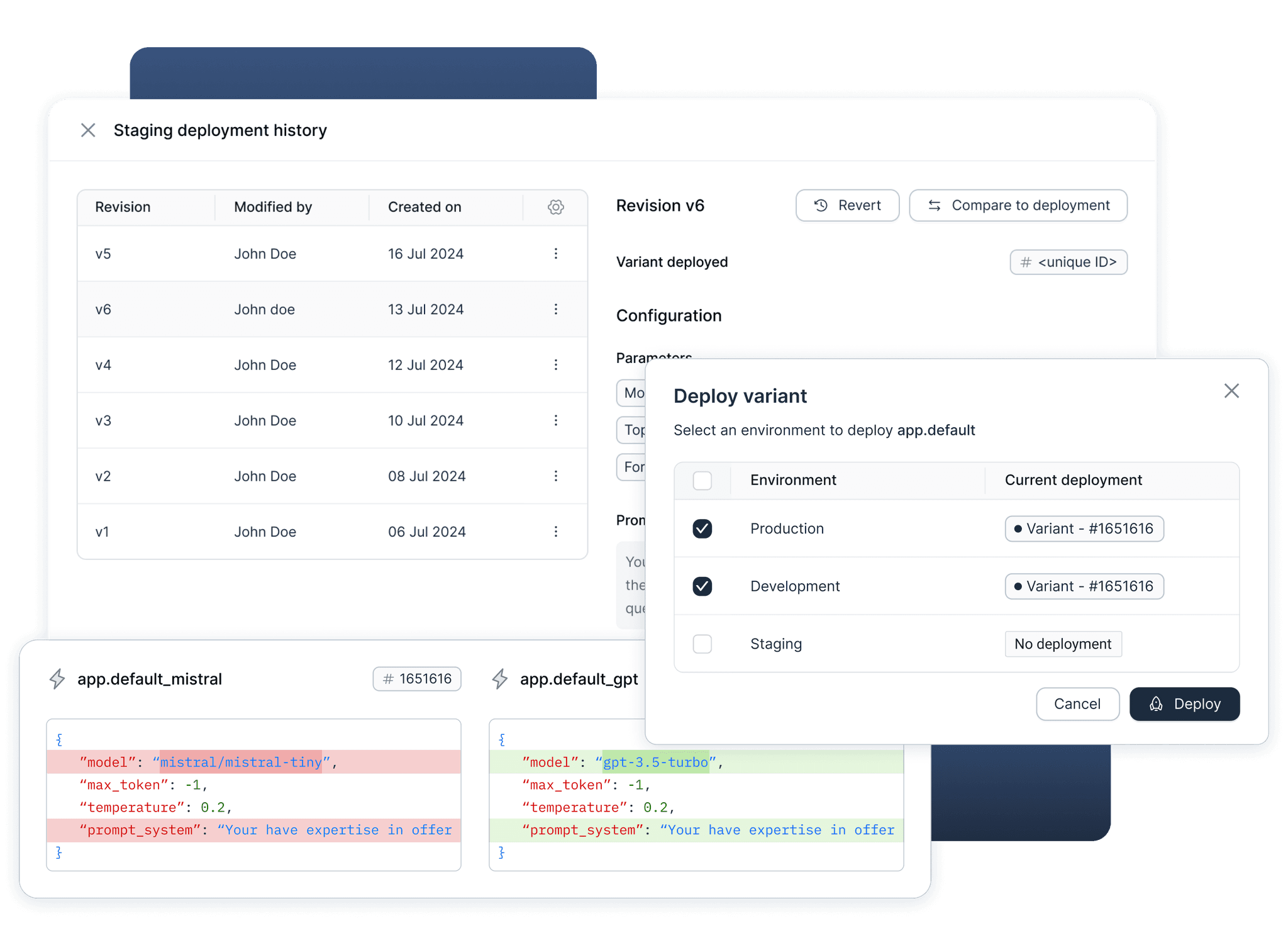

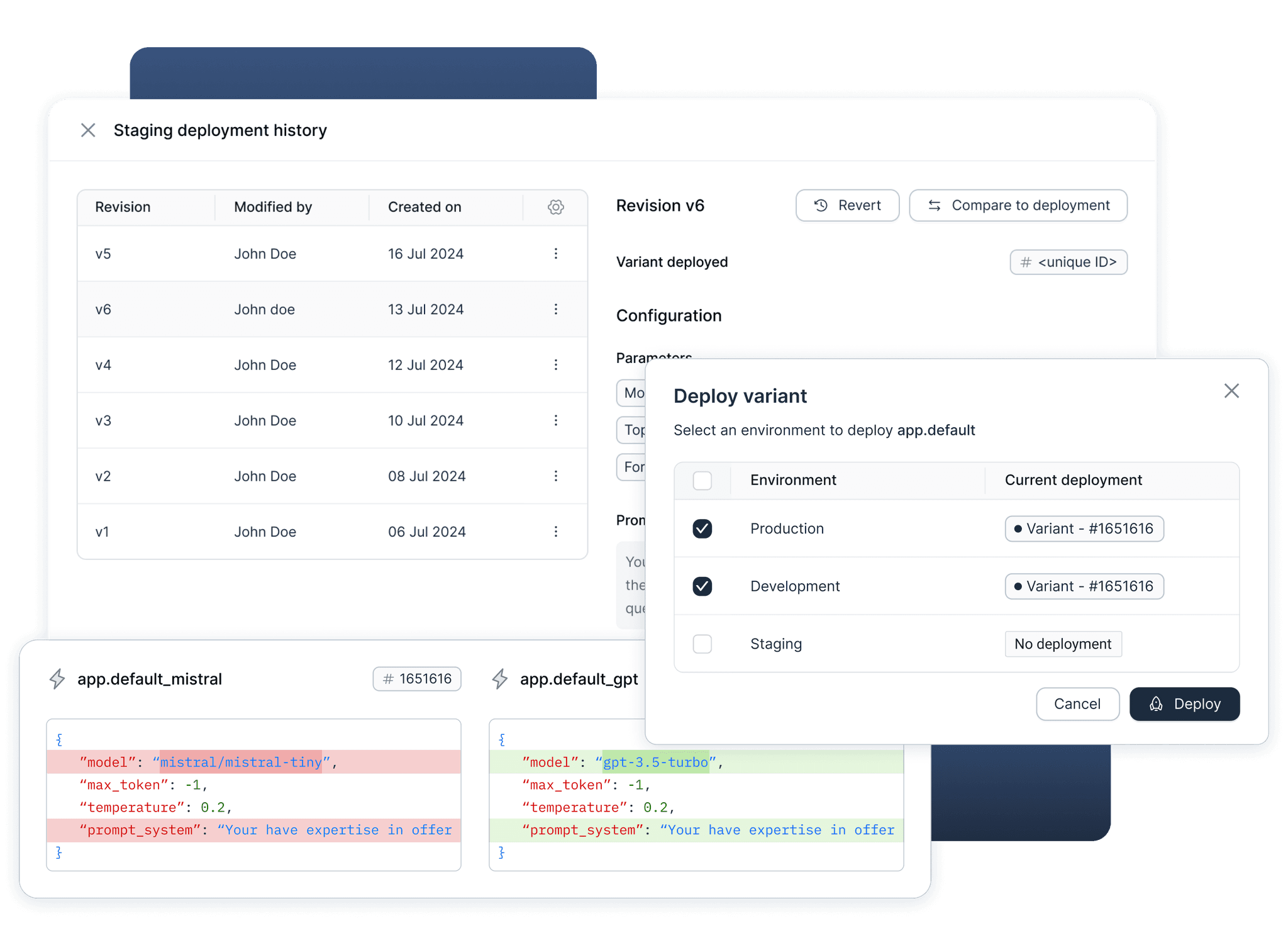

PROMPT REGISTRY

Version and Collaborate on Prompts

-

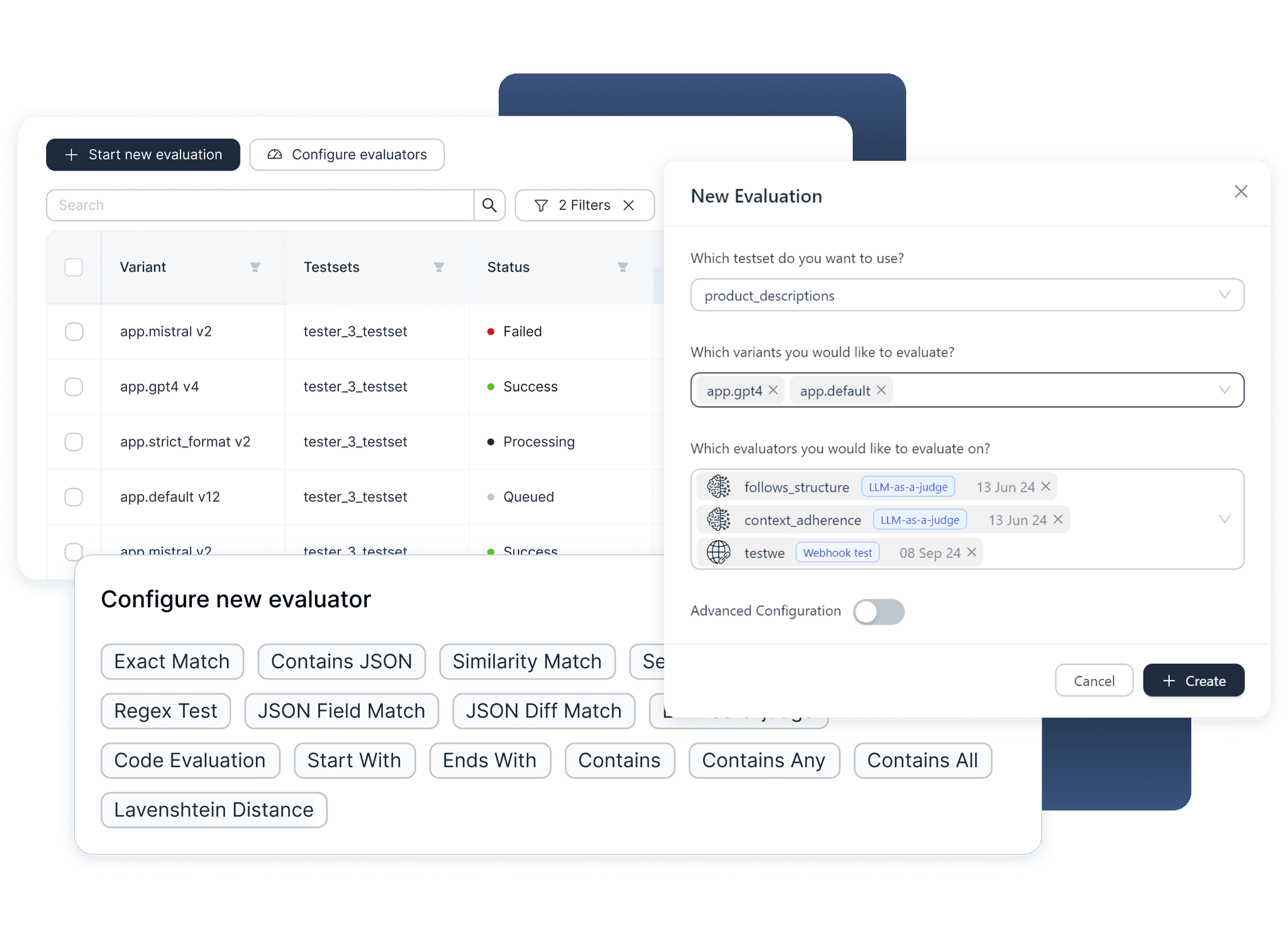

Track prompt versions and their outputs

-

Easily deploy to production and rollback

-

Link prompts to their evaluations and traces

Use best practices to manage your prompts throughout their lifecycle. Systematically version your prompts and move them to production with a reliable record-keeping system. Easily connect all related information, including evaluations and traces, to your prompts.
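To make the version, deploy, and rollback workflow concrete, here is a minimal in-memory sketch in Python. It is not Lexica's API: `PromptRegistry`, `PromptVersion`, every method name, and the `eval-001` ID are hypothetical, chosen only to illustrate how versions, deployments, and linked evaluations or traces could relate to each other.

```python
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    """One revision of a prompt, plus IDs of records linked to it."""
    number: int
    template: str
    evaluation_ids: list[str] = field(default_factory=list)
    trace_ids: list[str] = field(default_factory=list)


class PromptRegistry:
    """Hypothetical in-memory registry (an illustration, not Lexica's API)."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}
        # Per prompt: history of deployed version numbers, newest last.
        self._deployments: dict[str, list[int]] = {}

    def commit(self, name: str, template: str) -> PromptVersion:
        """Store a new version; numbers start at 1 and only grow."""
        versions = self._versions.setdefault(name, [])
        version = PromptVersion(number=len(versions) + 1, template=template)
        versions.append(version)
        return version

    def get(self, name: str, number: int) -> PromptVersion:
        versions = self._versions.get(name, [])
        if not 1 <= number <= len(versions):
            raise KeyError(f"{name!r} has no version {number}")
        return versions[number - 1]

    def deploy(self, name: str, number: int) -> None:
        """Mark an existing version as the one serving production."""
        self.get(name, number)  # raises KeyError if the version is missing
        self._deployments.setdefault(name, []).append(number)

    def rollback(self, name: str) -> PromptVersion:
        """Return production to the previously deployed version."""
        history = self._deployments.get(name, [])
        if len(history) < 2:
            raise ValueError("no earlier deployment to roll back to")
        history.pop()
        return self.get(name, history[-1])

    def production(self, name: str) -> PromptVersion:
        history = self._deployments.get(name, [])
        if not history:
            raise KeyError(f"{name!r} has never been deployed")
        return self.get(name, history[-1])


registry = PromptRegistry()
registry.commit("summarize", "Summarize: {text}")
v2 = registry.commit("summarize", "Summarize in one sentence: {text}")
v2.evaluation_ids.append("eval-001")  # hypothetical evaluation ID

registry.deploy("summarize", 1)
registry.deploy("summarize", 2)
registry.rollback("summarize")  # production is version 1 again
print(registry.production("summarize").number)
```

Keeping the deployment history separate from the version list means a rollback never deletes a version, so the outputs, evaluations, and traces attached to it stay available for comparison.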

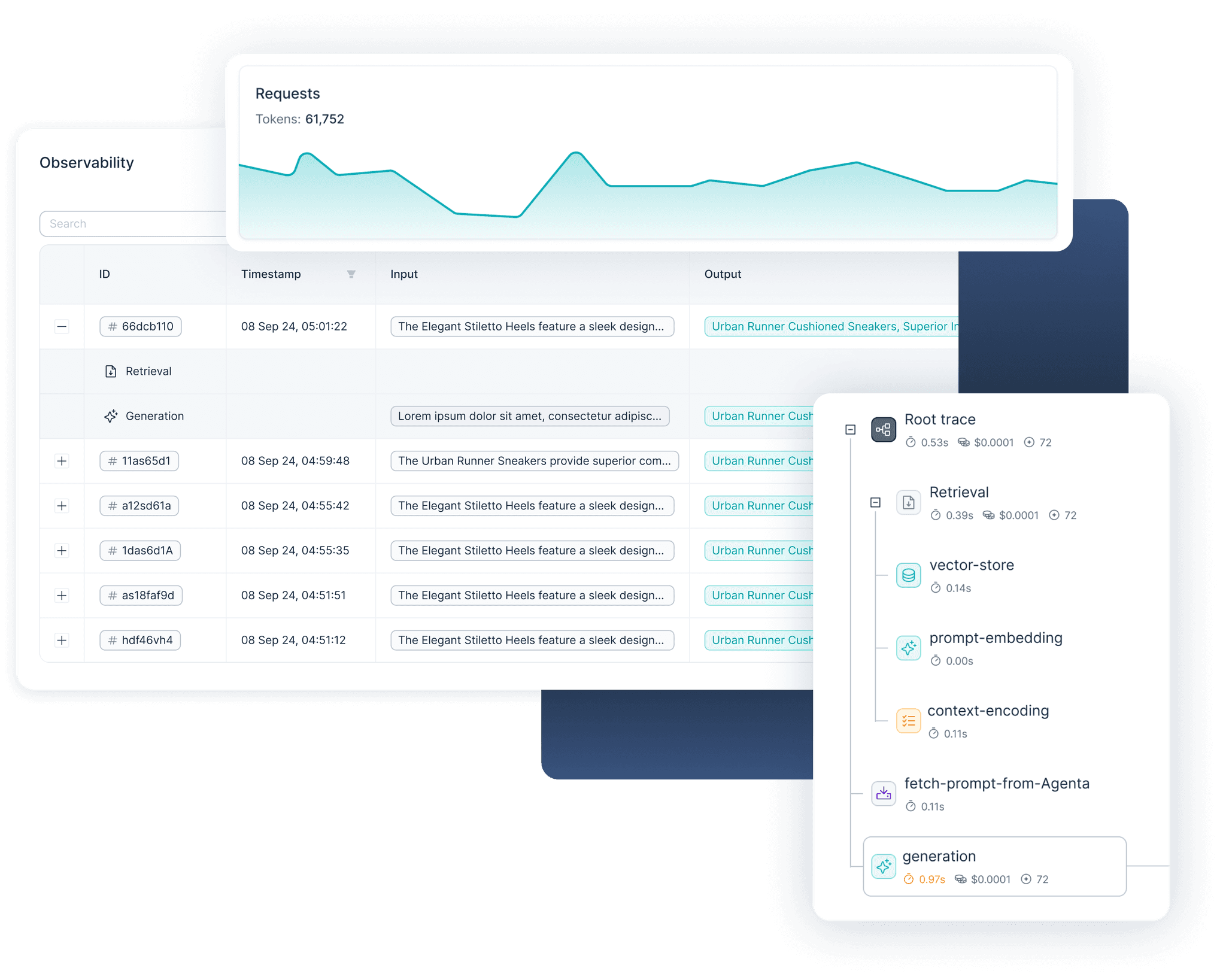

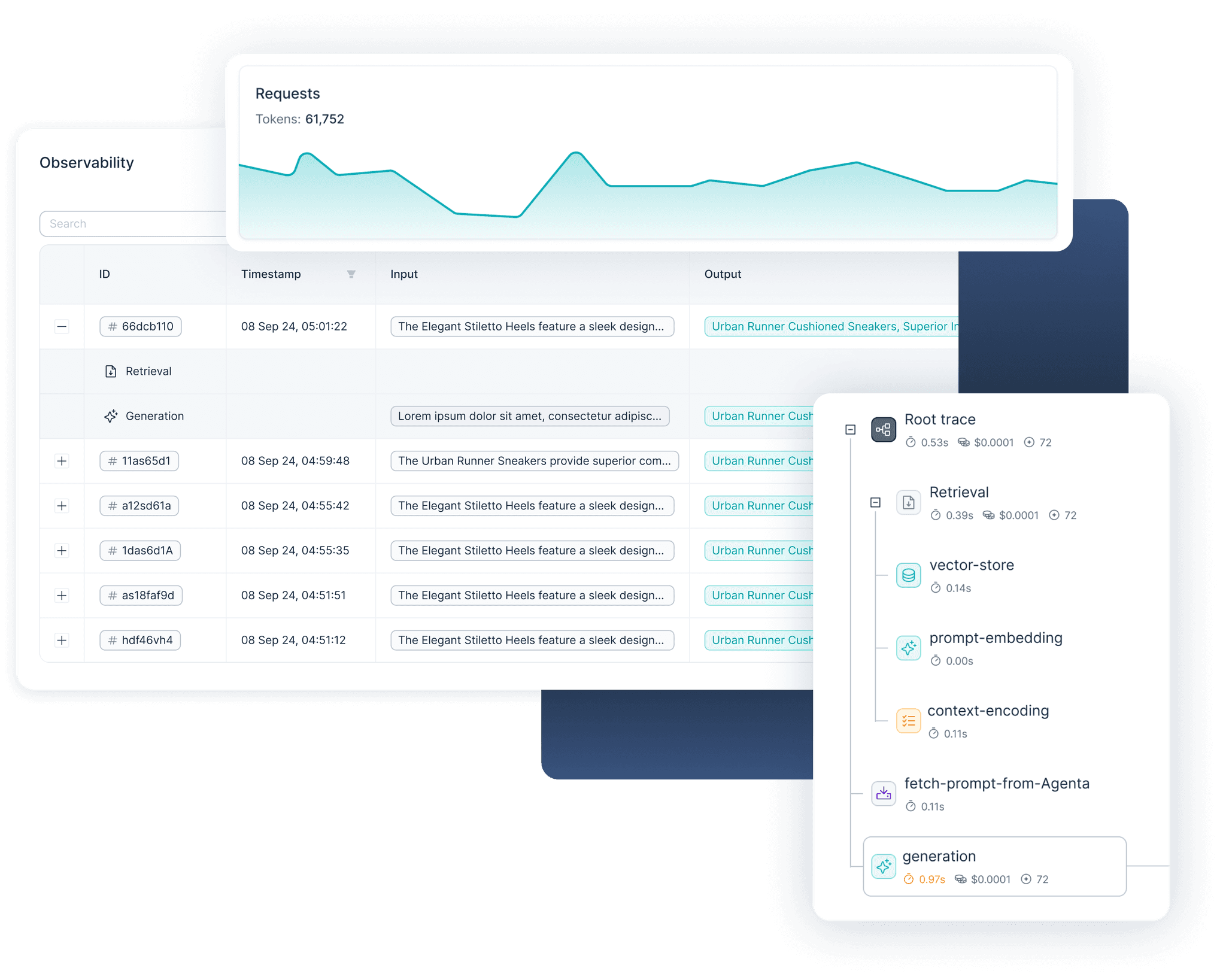

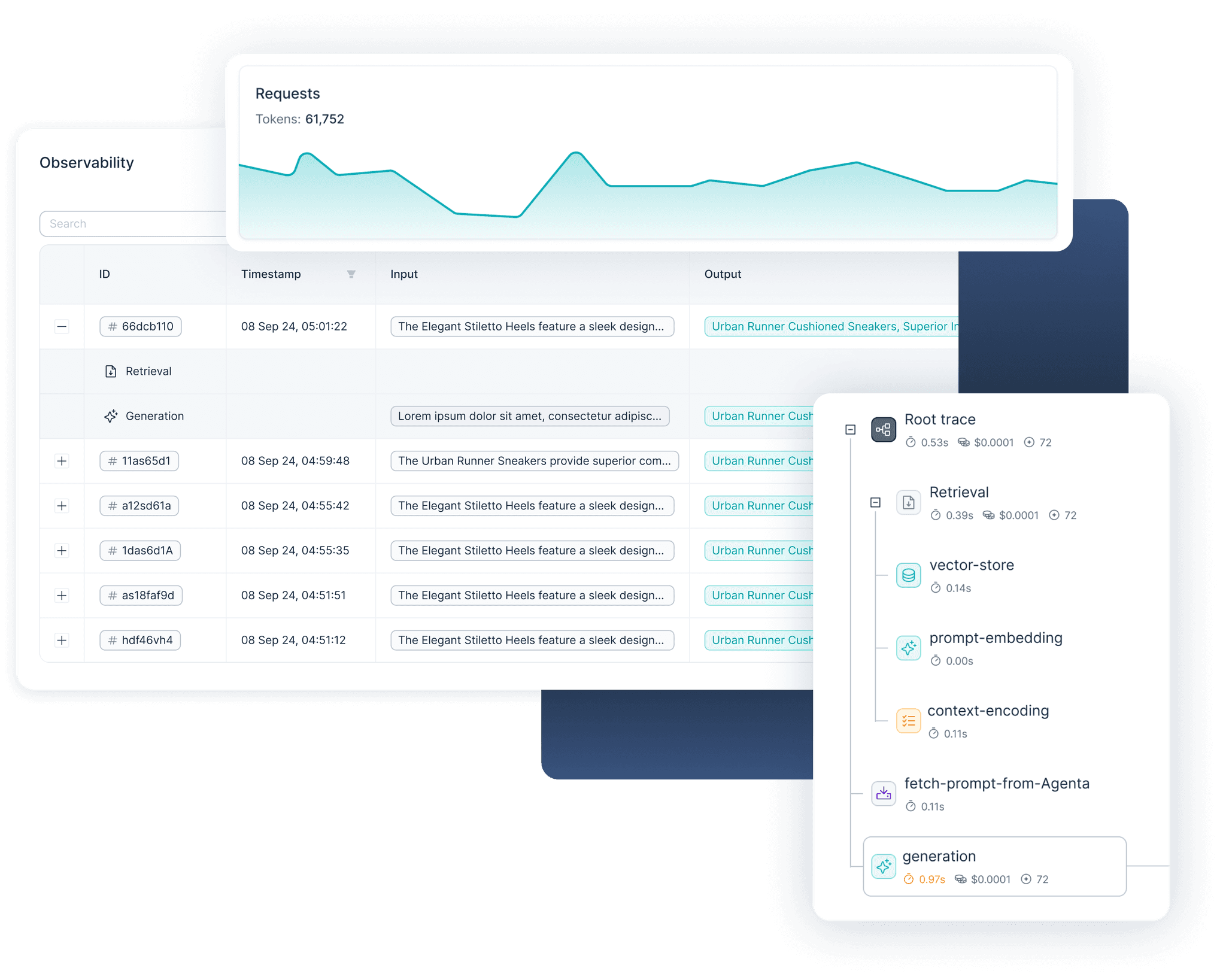

OBSERVABILITY

Trace and Debug

-

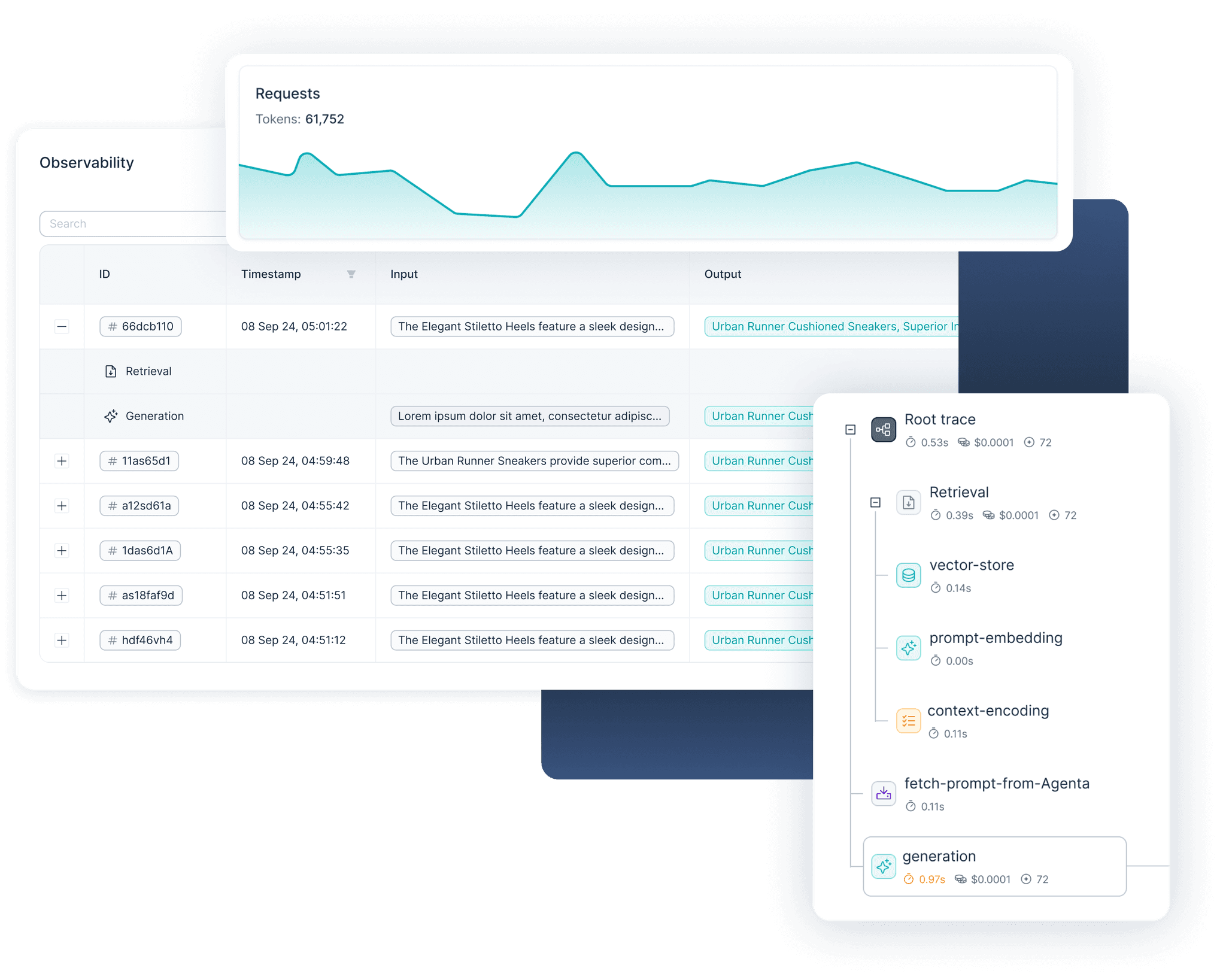

Debug outputs and identify root causes

-

Identify edge cases and curate golden sets

-

Monitor usage and quality

-

Monitor usage of your application and use traces to continuously improve accuracy.

-

Find edge cases and use them to curate golden sets.

-

Debug challenging inputs and find root causes.

NEED HELP?

Frequently asked questions

Create robust LLM apps in record time. Focus on your core business logic and leave the rest to us.

What is Lexica?

Can I use Lexica with a self-hosted fine-tuned model such as Llama or Falcon?

How can I limit hallucinations and improve the accuracy of my LLM apps?

Is it possible to use vector embeddings and retrieval-augmented generation with Lexica?

Need a demo?

We are more than happy to give you a free demo.

Copyright © 2023-2060 Lexicatech UG (haftungsbeschränkt)
